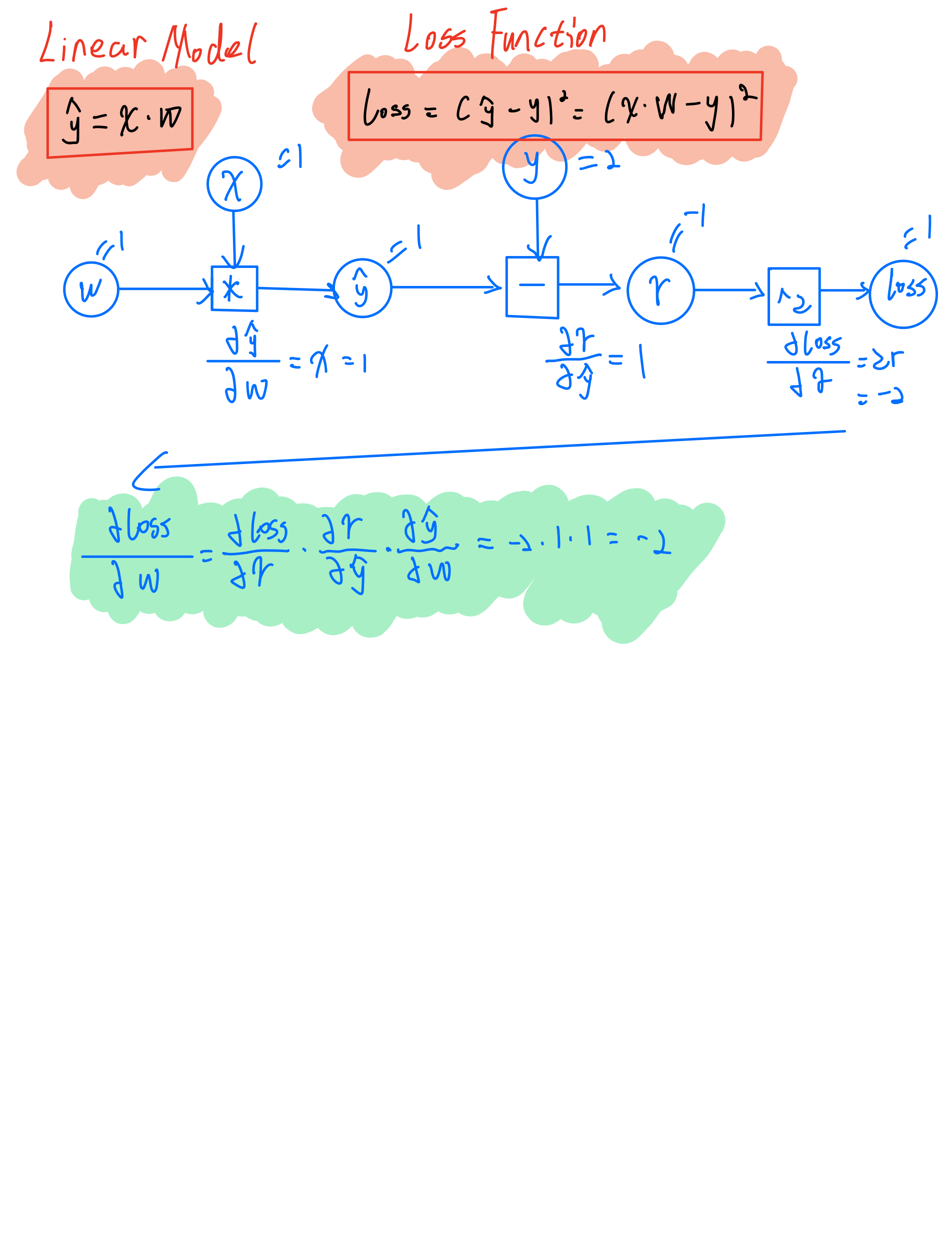

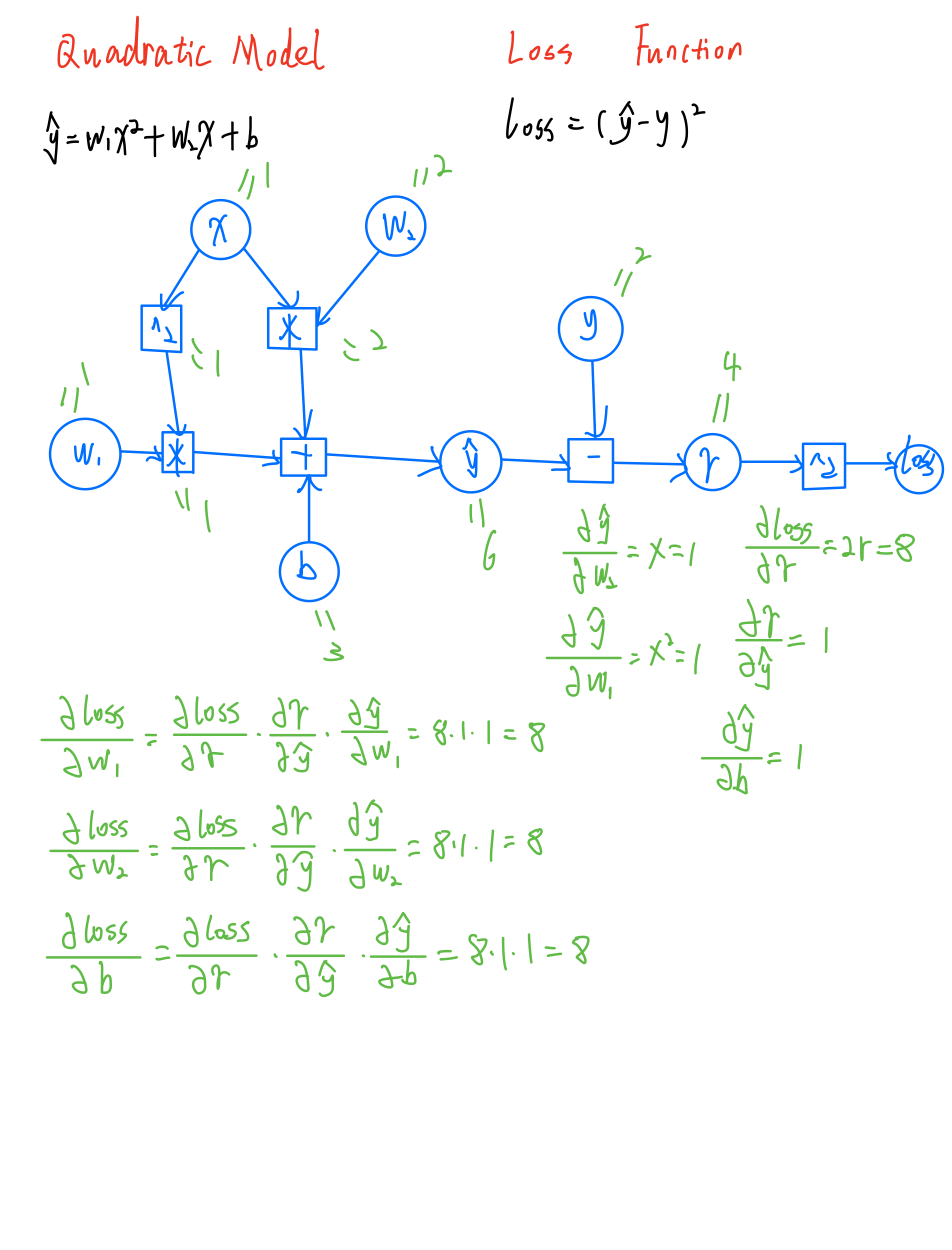

Back Propagation

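For a very complex network, writing out the gradient of every weight by hand as a closed-form expression is impractical. If we instead treat the network as a computational graph and propagate gradients through that graph, the gradients can still be computed. This is backpropagation.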
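As a minimal sketch of the idea, the snippet below runs a forward and a backward pass by hand through a tiny graph. The model $\widehat{y} = w \cdot x$, the squared-error loss, and the input values are assumptions chosen for illustration; they are not taken from the figures above.

```python
# Tiny computational graph (illustrative assumption):
#   y_hat = w * x  ->  residual = y_hat - y  ->  loss = residual ** 2

def forward(w, x, y):
    """Forward pass: evaluate each node and keep the intermediate values."""
    y_hat = w * x
    residual = y_hat - y
    loss = residual ** 2
    return y_hat, residual, loss

def backward(x, residual):
    """Backward pass: multiply the local derivatives from the loss back to w."""
    d_loss_d_residual = 2 * residual   # derivative of residual ** 2
    d_residual_d_y_hat = 1.0           # derivative of y_hat - y w.r.t. y_hat
    d_y_hat_d_w = x                    # derivative of w * x w.r.t. w
    return d_loss_d_residual * d_residual_d_y_hat * d_y_hat_d_w

w, x, y = 1.0, 2.0, 4.0
y_hat, residual, loss = forward(w, x, y)
grad_w = backward(x, residual)
print(loss, grad_w)  # 4.0 -8.0
```

Each node only needs its own local derivative; the gradient with respect to `w` is the product of those local derivatives along the path from the loss back to `w`.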

$\widehat{y} = w_2(w_1 \cdot x + b_1) + b_2$

$\widehat{y} = w_2\cdot w_1 \cdot x + (w_2 \cdot b_1 + b_2)$

$\widehat{y} = w\cdot x + b$

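As the derivation shows, simply stacking more linear layers does not make the model any more expressive: the two layers collapse into a single linear layer with $w = w_2 \cdot w_1$ and $b = w_2 \cdot b_1 + b_2$. To avoid this, we add a nonlinear layer (an activation function) after each linear layer.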
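The collapse can be checked numerically. The following sketch uses scalar weights with arbitrarily chosen values, and ReLU as the activation; the choice of ReLU is an assumption, since the notes do not name a specific activation function.

```python
def linear(w, b, x):
    """A scalar linear layer: w * x + b."""
    return w * x + b

def relu(v):
    """ReLU activation, used here only as an example nonlinearity."""
    return max(0.0, v)

# Arbitrary illustrative parameters for the two layers.
w1, b1 = 2.0, 1.0
w2, b2 = -3.0, 0.5

# Parameters of the equivalent single layer, as in the derivation.
w = w2 * w1
b = w2 * b1 + b2

xs = [-2.0, -0.5, 0.0, 1.0, 3.0]
stacked = [linear(w2, b2, linear(w1, b1, x)) for x in xs]
collapsed = [linear(w, b, x) for x in xs]
with_relu = [linear(w2, b2, relu(linear(w1, b1, x))) for x in xs]

print(stacked == collapsed)    # True: two linear layers act as one
print(with_relu == collapsed)  # False: the activation breaks the collapse
```

With the activation in between, the output at `x = -2.0` is `0.5` instead of `9.5`, so the network is no longer equivalent to any single linear layer with these parameters.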

Chain Rule

$\frac{\text{d}z}{\text{d}t} = \frac{\partial z}{\partial x} \frac{\text{d}x}{\text{d}t} + \frac{\partial z}{\partial y} \frac{\text{d}y}{\text{d}t}$
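To make the formula concrete, the sketch below compares the chain-rule result with a central finite difference. The functions $z = x^2 y$, $x = \cos t$, $y = \sin t$ and the helper names are assumptions picked for illustration.

```python
import math

def x_of(t):
    return math.cos(t)

def y_of(t):
    return math.sin(t)

def z_of(x, y):
    return x ** 2 * y

def dz_dt_chain(t):
    """dz/dt from the chain rule: dz/dx * dx/dt + dz/dy * dy/dt."""
    x, y = x_of(t), y_of(t)
    dz_dx = 2 * x * y
    dz_dy = x ** 2
    dx_dt = -math.sin(t)
    dy_dt = math.cos(t)
    return dz_dx * dx_dt + dz_dy * dy_dt

def dz_dt_numeric(t, h=1e-6):
    """dz/dt approximated with a central finite difference."""
    z_plus = z_of(x_of(t + h), y_of(t + h))
    z_minus = z_of(x_of(t - h), y_of(t - h))
    return (z_plus - z_minus) / (2 * h)

t = 0.7
print(dz_dt_chain(t), dz_dt_numeric(t))  # the two values should agree closely
```

Backpropagation applies the same rule at every node of the graph, except that the derivatives are accumulated from the output back towards the inputs.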

Tensor in PyTorch

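The most basic data type in PyTorch is the Tensor. A Tensor can store a scalar, a vector, a matrix, a three-dimensional array, or a higher-dimensional array; it can also hold a gradient.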
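A short sketch of both roles follows: a Tensor as a data container of varying dimension, and a Tensor that accumulates a gradient. The variable names, the model `w * x`, and the squared-error loss are assumptions for illustration and are not the code from the original notes.

```python
import torch

# Tensors of different dimensionality.
scalar = torch.tensor(3.0)              # 0-dimensional
vector = torch.tensor([1.0, 2.0, 3.0])  # 1-dimensional
matrix = torch.zeros(2, 3)              # 2-dimensional
cube = torch.zeros(2, 3, 4)             # 3-dimensional
print(scalar.dim(), vector.dim(), matrix.dim(), cube.dim())  # 0 1 2 3

# A tensor that should receive a gradient must set requires_grad=True.
w = torch.tensor([1.0], requires_grad=True)
x, y = 2.0, 4.0

# Building the loss from w records the computational graph.
loss = (w * x - y) ** 2

# backward() propagates gradients through the graph and stores
# d(loss)/d(w) in w.grad.
loss.backward()
print(w.grad)  # tensor([-8.])
```

Here `w.data` holds the value and `w.grad` holds the gradient, which is itself a Tensor. Gradients accumulate across calls to `backward()`, so a training loop would typically reset them (for example with `w.grad.zero_()`) after each update.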

`# -*- coding: UTF-8 -*-`
